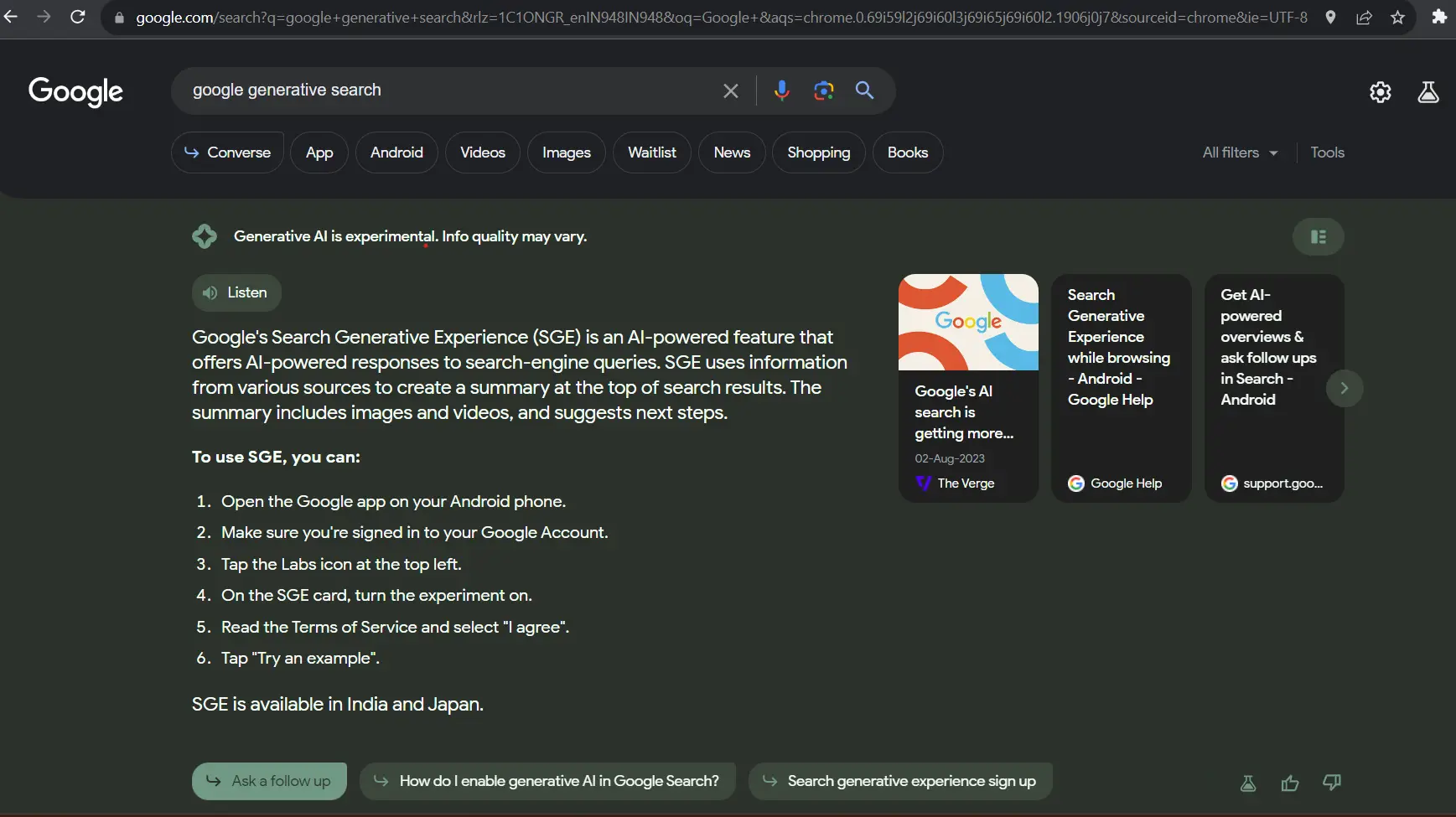

Google's generative AI models, Gemini 1.5 Pro and 1.5 Flash, have been marketed as revolutionary tools capable of processing and analyzing vast amounts of data. Google has promoted their ability to summarize lengthy documents and search through extensive video footage as a showcase of the models' “long context” capabilities. However, recent research calls these claims into question, suggesting that Gemini’s abilities may be more limited than advertised.

The Promise of Long Context

One of the key selling points for the Gemini models is their context window, which refers to the amount of input data the models can consider before generating an output. The latest versions of Gemini can handle upwards of 2 million tokens, which is roughly equivalent to 1.4 million words, two hours of video, or 22 hours of audio. This capability ostensibly allows the models to process and understand extremely large datasets, potentially revolutionizing fields such as data analysis, document summarization, and video content search.
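To make the scale of these figures concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes the ratio implied by the numbers above (1.4 million words per 2 million tokens, or about 0.7 words per token) and uses it to estimate whether a text of a given length would fit in the context window. The function names are hypothetical, and real tokenizers vary by language and content, so treat the result as a rough estimate rather than an exact count.

```python
# Rough context-window arithmetic based on the figures quoted in the article.
# Assumption: 2,000,000 tokens is about 1,400,000 words (roughly 0.7 words per token).
# Real tokenizers differ, so these are estimates only.

REFERENCE_TOKENS = 2_000_000   # context window size cited in the article
REFERENCE_WORDS = 1_400_000    # approximate word equivalent cited in the article


def estimate_tokens(word_count: int) -> int:
    """Estimate the token count for a text, rounding up (hypothetical helper)."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (word_count * REFERENCE_TOKENS + REFERENCE_WORDS - 1) // REFERENCE_WORDS


def fits_in_context(word_count: int, window_tokens: int = REFERENCE_TOKENS) -> bool:
    """Return True if the estimated token count fits in the context window."""
    return estimate_tokens(word_count) <= window_tokens


if __name__ == "__main__":
    for words in (700_000, 1_400_000, 2_000_000):
        print(f"{words:>9} words ~ {estimate_tokens(words):>9} tokens, "
              f"fits: {fits_in_context(words)}")
```

Fitting inside the window says only that the model can accept the input; it says nothing about how well the model will reason over it.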

The Reality: Mixed Results

Despite these impressive specifications, two separate studies have raised significant concerns about the actual performance of the Gemini models. The researchers tested the models' ability to comprehend and analyze extensive datasets, including works as lengthy as “War and Peace.” The findings were disappointing: in a series of document-based tests, Gemini 1.5 Pro and 1.5 Flash answered questions correctly only about 40% to 50% of the time.

Marzena Karpinska, a postdoctoral researcher at UMass Amherst and co-author of one of the studies, commented on these findings. "While models like Gemini 1.5 Pro can technically process long contexts, we have seen many cases indicating that the models don’t actually ‘understand’ the content."

Struggling with Comprehension

The issue appears to lie not in the ability of Gemini models to process large amounts of data but in their ability to understand and make sense of it. This discrepancy points to a broader challenge in AI development: enabling machines to not just parse but also comprehend complex and lengthy inputs.

In practical terms, this means that while Gemini can technically handle large datasets, its utility in real-world applications might be limited. For instance, when tasked with summarizing a hundred-page document or searching for specific scenes within a film, the models might generate outputs that are incomplete or inaccurate, reducing their effectiveness for users who rely on precise data analysis.

The Road Ahead

These findings underscore the ongoing challenges in the field of AI and natural language processing. While the technology has made significant strides, the gap between what is technically possible and what is practically useful remains substantial. For businesses and researchers looking to leverage AI for data-heavy tasks, this means continued reliance on traditional methods or supplementary tools to ensure accuracy and comprehensiveness.

For Google, the mixed performance of Gemini highlights the importance of tempering expectations and continuing to refine its models. As AI technology evolves, the hope is that future iterations will not only process large datasets but also understand them more effectively, bridging the current gap between potential and performance.

Conclusion

Google's Gemini 1.5 Pro and 1.5 Flash models represent a significant advancement in AI's ability to handle long contexts. However, recent studies indicate that these models fall short of their promised capabilities, particularly in terms of comprehension and accuracy. As the field progresses, ongoing research and development will be crucial to realizing the full potential of AI in data analysis and beyond. For now, users should approach these tools with cautious optimism, recognizing both their potential and their current limitations.
